10 - Security

Security: the protection of data against unauthorized disclosure, alteration, or destruction.

General Considerations

- Legal, social, and ethical aspects

- Physical controls

- Company policies

- Operational concerns

- Hardware controls

- Operating system security

- Data Ownership

Data Security

Threat: Any situation or event, whether intentional or unintentional, that will adversely affect a system and consequently an organization.

Theft and fraud

- unauthorized amendment of data

- program alteration

- wire tapping

- illegal entry by hacker

- blackmail

- inadequate or ill-thought-out procedures that allow confidential output to be mixed with normal output

- staff shortage or strikes

- inadequate staff training

- viewing unauthorized data and disclosing it

- electronic interference and radiation

- fire (electrical fault/lightning strike)

- flood

E.g. a hardware failure results in a corrupt disk. Questions to ask:

- Does alternative hardware exist that can be used?

- Is this alternative hardware secure?

- Can we legally run our software on this hardware?

- If no alternative hardware exists, how quickly can the problem be fixed?

- When were the last backups taken of the database and log files?

- Are the backups in a fireproof safe or offsite?

- If the most current database needs to be recreated by restoring the backup with the log files, how long will it take?

- Will there be any immediate effects on clients?

- If we restore the system will the same or similar breach of security occur again unless we do something to prevent it from happening?

- Could our contingency planning be improved?

Computer Based Controls

The security of a DBMS is only as good as that of the operating system.

Authorization: The granting of a right or privilege which enables a subject to legitimately have access to a system or object. (user ID)

Authentication: A mechanism by which a subject is determined to be the genuine subject that they claim to be. (passwords, login)
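As an illustration of password-based authentication, here is a minimal sketch that stores a salted hash instead of the password itself and checks a login attempt against it. The function names and the iteration count are assumptions made for this example; the notes do not prescribe any particular scheme.

```python
import hashlib
import hmac
import os

# Assumed work factor for this sketch; real deployments tune this value.
ITERATIONS = 100_000


def hash_password(password, salt=None):
    """Return (salt, digest) for a password using PBKDF2-HMAC-SHA256."""
    if salt is None:
        salt = os.urandom(16)  # fresh random salt for each stored password
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, ITERATIONS
    )
    return salt, digest


def verify_password(password, salt, expected_digest):
    """Re-derive the digest from the attempt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)
```

The system keeps only the salt and digest. At login, `verify_password` decides whether the subject is who they claim to be; what the subject may then do is a separate authorization question.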

Privileges

- closed system (log in to the network + log into the db, more secure)

- open system

- Use of specific named databases

- selection or retrieval of data

- creation of tables and other objects

- update of data (may be restricted to certain columns)

- deletion of data (may be restricted to certain columns)

- insertion of data (may be restricted to certain columns)

- unlimited result set from a query (that is, a user is not restricted to a specified number of rows)

- execution of specific procedures and utility programs (stored procedures, access to views -- not tables)

- Creation of databases

- Creation (and modification) of DBMS user identifiers and other types of authorized identifiers

- Membership of a group of users, and consequent inheritance of the group's privileges

- Different ownership for different objects

- ownership of objects gives the owner all appropriate privileges on the objects owned

- newly created objects are automatically owned by their creator who gains the appropriate privileges for the object.

- privileges can be passed on to other authorized users

- DBMS maintains different types of authorization identifiers (users and groups)

- Don't generally give individual users access to objects (files/folders) in a network.

- instead give access to a group

DBMS Support

Discretionary Control

- privileges or authorities on different objects

- policy decisions

- flexible

GRANT SELECT ON supplier TO charley

GRANT SELECT, UPDATE (status, city) ON supplier TO judy

GRANT ALL PRIVILEGES ON supplier TO ted

GRANT SELECT ON promotions TO public

GRANT dba TO phil

REVOKE SELECT ON supplier FROM charley

REVOKE UPDATE ON supplier FROM judy

- The above policy decisions are enforced by the DBMS

- The systems catalog contains:

- sysuserlist

- sysauthlist

- note: checks slow the db down.
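To make the idea of catalog-based enforcement concrete, the sketch below models a tiny authorization catalog in memory and replays the GRANT and REVOKE statements listed above (except `GRANT dba TO phil`, which grants a role rather than a table privilege). The class and method names are hypothetical. A real DBMS stores this information in its own system tables and checks it on every statement, which is where the performance cost comes from.

```python
from collections import defaultdict


class AuthorizationCatalog:
    """Toy stand-in for the authorization tables in a system catalog."""

    def __init__(self):
        # (user, table) -> {privilege: set of columns, or None for all columns}
        self._grants = defaultdict(dict)

    def grant(self, user, table, privilege, columns=None):
        self._grants[(user, table)][privilege] = (
            set(columns) if columns else None
        )

    def revoke(self, user, table, privilege):
        self._grants[(user, table)].pop(privilege, None)

    def is_allowed(self, user, table, privilege, column=None):
        # A privilege granted to "public" applies to every user.
        for subject in (user, "public"):
            privileges = self._grants.get((subject, table), {})
            if privilege in privileges:
                allowed_columns = privileges[privilege]
                if allowed_columns is None or column in allowed_columns:
                    return True
        return False


def replay_notes_example():
    """Apply the table-level statements from the notes in order."""
    catalog = AuthorizationCatalog()
    catalog.grant("charley", "supplier", "SELECT")
    catalog.grant("judy", "supplier", "SELECT")
    catalog.grant("judy", "supplier", "UPDATE", columns=["status", "city"])
    for privilege in ("SELECT", "INSERT", "UPDATE", "DELETE"):
        catalog.grant("ted", "supplier", privilege)
    catalog.grant("public", "promotions", "SELECT")
    catalog.revoke("charley", "supplier", "SELECT")
    catalog.revoke("judy", "supplier", "UPDATE")
    return catalog
```

After the replay, charley can no longer read `supplier`, judy can still read it but not update it, and any user can read `promotions`.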

Mandatory Control

- Each object has a classification level (top secret, confidential, etc.)

- each user has a clearance level

- rarely supported by general-purpose DBMSs

- very rigid
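A minimal sketch of the level comparison behind mandatory control follows. The level names other than "top secret" and "confidential" are assumptions, and the write rule is borrowed from the Bell-LaPadula model commonly associated with this scheme; the notes themselves only state that objects have classifications and users have clearances.

```python
from enum import IntEnum


class Level(IntEnum):
    # Assumed ordering for illustration; higher value means more sensitive.
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


def can_read(clearance, classification):
    """A user may read an object only at or below their clearance."""
    return clearance >= classification


def can_write(clearance, classification):
    """Bell-LaPadula style rule (an assumption here): no writing down,
    so data cannot leak into a lower classification."""
    return clearance <= classification
```

The rigidity noted above shows up directly: the decision depends only on the two levels, and no owner can grant an exception.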

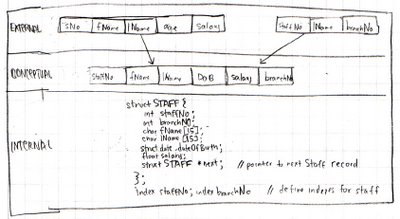

Views: can be used to present only the data which is relevant to the user by effectively hiding other data (fields)
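The following sketch shows a view hiding a column, using Python's built-in `sqlite3` module. The `supplier` columns and the view name `supplier_public` are assumptions for the example. SQLite has no GRANT statement, so in a multi-user DBMS the view would be combined with a privilege on the view rather than on the base table.

```python
import sqlite3


def build_demo():
    """Create an in-memory supplier table and a view that hides `status`."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE supplier ("
        "sno TEXT PRIMARY KEY, sname TEXT, status INTEGER, city TEXT)"
    )
    conn.executemany(
        "INSERT INTO supplier VALUES (?, ?, ?, ?)",
        [("S1", "Smith", 20, "London"), ("S2", "Jones", 10, "Paris")],
    )
    # The view exposes every row but omits the sensitive column.
    conn.execute(
        "CREATE VIEW supplier_public AS SELECT sno, sname, city FROM supplier"
    )
    return conn


def view_columns(conn, view_name):
    """Return the column names a user of the view can see."""
    cursor = conn.execute(f"SELECT * FROM {view_name}")
    return [description[0] for description in cursor.description]
```

A user restricted to `supplier_public` sees the same suppliers but has no way to name the `status` column.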

Backing up: The process of periodically taking a copy of the database and journal (and possibly programs) onto offline storage media.

- Frequency depends on how fast data changes.

- Varies depending on the size of the data

- Must be enabled

- copies of journal on other disks

- record all security violations

- done in transaction log

- Client-server DBMSs will record up to the point of failure

- Desktop databases like Access will only record from the last backup
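As a small illustration of taking a backup copy, the sketch below uses the `Connection.backup` method of Python's `sqlite3` module to copy a live database into a second one. The function name is hypothetical. In practice the target would be a file on separate or offline media, and the schedule would follow how fast the data changes.

```python
import sqlite3


def take_backup(source, target):
    """Copy the full contents of `source` into `target`, page by page."""
    source.backup(target)


def row_count(conn, table):
    """Helper used to confirm the copy contains the same data."""
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]
```

A usage sketch: open the production database as `source`, open a connection to the backup location as `target`, call `take_backup(source, target)`, then close `target` and move the file offsite.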

Checkpointing: The point of synchronization between the database and the transaction log file. All buffers are force-written to secondary storage.
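SQLite's write-ahead log offers a related, though not identical, mechanism that can make the synchronization point above easier to picture. In the sketch below, committed changes first sit in a separate log file, and an explicit checkpoint moves them into the main database file and truncates the log. The function name and file names are assumptions for the example.

```python
import os
import sqlite3
import tempfile


def checkpoint_demo():
    """Return (log size before, log size after, busy flag) for one checkpoint."""
    with tempfile.TemporaryDirectory() as workdir:
        db_path = os.path.join(workdir, "demo.db")
        wal_path = db_path + "-wal"  # SQLite names its log file this way
        conn = sqlite3.connect(db_path)
        try:
            conn.execute("PRAGMA journal_mode=WAL")
            conn.execute("CREATE TABLE t (x INTEGER)")
            conn.execute("INSERT INTO t VALUES (1)")
            conn.commit()  # the change is now recorded in the log
            size_before = os.path.getsize(wal_path)
            # Move logged pages into the database file and empty the log.
            busy, _, _ = conn.execute(
                "PRAGMA wal_checkpoint(TRUNCATE)"
            ).fetchone()
            size_after = os.path.getsize(wal_path)
            return size_before, size_after, busy
        finally:
            conn.close()
```

After the checkpoint the database file alone reflects every committed change, so recovery only has to consider log records written after that point.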

Encryption:

The encoding of the data by a special algorithm that renders the data unreadable by any program without the decryption key.

- encrypt/decrypt

- degradation of performance
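The toy cipher below only illustrates the idea that data becomes unreadable without the key and that encrypting and decrypting costs extra work. It is an assumption-laden teaching sketch built from a SHA-256 keystream, with no nonce and no integrity protection, and is not suitable for protecting real data; a production system would use a vetted algorithm from an established cryptography library.

```python
import hashlib


def keystream(key, length):
    """Derive `length` pseudo-random bytes from the key (toy construction)."""
    stream = bytearray()
    counter = 0
    while len(stream) < length:
        block = hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        stream.extend(block)
        counter += 1
    return bytes(stream[:length])


def xor_cipher(data, key):
    """XOR the data with the keystream; the same call encrypts and decrypts."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))
```

Calling `xor_cipher` twice with the same key returns the original bytes, while a different key yields unreadable output. The hashing done on every call is a small-scale picture of the performance cost noted above.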

To ensure controls are effective:

Authorization and Authentication

- Password lengths

- Password duration

- Determine Procedures

- frequency

- Ensuring accuracy of input data

- Ensuring accuracy of data processing

- Prevention and detection of errors during program execution

- properly testing and documenting program development and maintenance

- avoiding unauthorized program alteration

- granting and monitoring access to data

- ensuring documentation is up to date

Establishment of a security policy:

- the area of the business it covers

- responsibilities and obligations of employees

- procedures that must be followed

- Who the key personnel are and how they can be contacted

- If key personnel are unavailable, a list of alternative personnel and how they can be contacted.

- Who decides that a contingency exists and how that is decided

- The technical requirements of transferring operations elsewhere

- Additional equipment needed

- Whether communication lines will need to be installed

- operational requirements of transferring operation elsewhere

- staff needed to work away from home

- staff needed to work unusual hours

- staff will need to be compensated

- Any outside contacts who may be able to help, for example:

- equipment manufacturer

- Whether any insurance exists to cover the situation

- Restrict access to printers especially if used for sensitive info. (confidential memo etc)

- Locate computer terminals sensibly if likely to display sensitive info. (screens visible from windows)

- Site cabling to avoid damage

- Ensure a secure storage area is available on-site

- have an off-site secure storage area

- index all the material (list it)

- Internal controls: govern access to areas within a building

- External controls: Govern access to the site

- cold site (empty rooms)

- warm site (rooms with computers)

- hot site (rooms with working and connected computers)

- Viruses (anti virus, patches etc.)

- Programs that pull random, not consecutive, data (e.g. census)

- get rid of the specific info, keep data that generalizes

- Preventing queries from operating on only a few database entries

- Randomly adding in additional entries to the original query result set, which produces a certain error but approximates to the true response

- using only a random sample of the database to answer the query

- maintaining a history of the query results and rejecting queries that use a high number of records identical to those used in previous queries.
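Two of the statistical-database safeguards listed above, refusing queries that touch only a few entries and answering from a random sample, can be sketched as follows. The threshold, function names, and row layout are assumptions for illustration only.

```python
import random

# Hypothetical minimum number of rows a statistical query must cover.
MIN_QUERY_SET_SIZE = 5


def restricted_average(rows, predicate, field, min_size=MIN_QUERY_SET_SIZE):
    """Average `field` over matching rows, refusing small query sets."""
    matching = [row[field] for row in rows if predicate(row)]
    if len(matching) < min_size:
        raise PermissionError("query set too small to answer safely")
    return sum(matching) / len(matching)


def sampled_average(rows, predicate, field, sample_fraction=0.5, rng=None):
    """Average `field` over a random sample, giving an approximate answer."""
    rng = rng or random.Random()
    sample_size = max(1, int(len(rows) * sample_fraction))
    sample = rng.sample(rows, sample_size)
    matching = [row[field] for row in sample if predicate(row)]
    if not matching:
        return None  # the sample happened to contain no matching rows
    return sum(matching) / len(matching)
```

The first function blocks a query such as "average salary of the one person in department B", which would otherwise reveal an individual value. The second trades accuracy for protection, since repeated queries see different subsets.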

- Establish a security team

- Define the scope of the analysis and obtain system details

- identify all existing countermeasures

- identify and evaluate all assets

- Identify and assess all threats and risks (what can go wrong)

- Select countermeasures, undertake a cost/benefit analysis and compare with existing countermeasures

- Make recommendations

- test security system
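One common way to carry out the cost/benefit step is to compare the expected yearly loss before and after a countermeasure with what the countermeasure costs per year. The annualized loss expectancy formula used here is a widely used convention, not something these notes specify, and all names and figures are hypothetical.

```python
def annualized_loss_expectancy(single_loss, annual_rate):
    """Expected yearly loss: cost of one incident times incidents per year."""
    return single_loss * annual_rate


def net_benefit(single_loss, rate_before, rate_after, annual_cost):
    """Yearly saving from a countermeasure minus what it costs to run."""
    loss_before = annualized_loss_expectancy(single_loss, rate_before)
    loss_after = annualized_loss_expectancy(single_loss, rate_after)
    return (loss_before - loss_after) - annual_cost
```

For example, if an incident costs 100,000, happens about once every two years without the countermeasure and once every ten years with it, and the countermeasure costs 15,000 a year, the net benefit is positive and the countermeasure is worth recommending. A negative result suggests keeping the existing countermeasures.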

privacy: Concerns the right of an individual to not have personal info collected, stored and disclosed either willfully or indiscriminately.

data protection: The protection of personal data from unlawful acquisition, storage and disclosure, and the provision of the necessary safeguards to avoid the destruction or corruption of the legitimate data held.

Since the 1970s, different countries have instituted different laws that deal with these issues.

- Post-9/11: less privacy